Abstract

Generative artists have started to engage the poetic and expressive potentials of film playfully and efficiently, with explicit or implicit critique of cinema in a broader cultural, economic and political context.

This lecture and paper look at the creative approaches and incentives within generative cinema as one of the emerging fields of digital art. In different ways, these approaches and incentives point to open algorithmic concepts for freely, parametrically, analytically and/or synthetically generating cinematic structure, narrative, composition, editing, presentation and interaction.

Their algorithmic essence also provides a platform for a critical understanding of strategies such as market analysis, target group research, script evaluation and box-office assessment in the contemporary film industry.

Keywords: Algorithm, Cinema, Creative Coding, Digital Art, Film, Generative Art, Generative Cinema, Programming.

I gave this lecture at the Consciousness Reframed 2016 conference at DeTao/SIVA in Shanghai, the Generative Art 2016 conference at La Fondazione Cassa di Risparmio di Firenze in Florence, the Going Digital conference at the Museum of Applied Arts in Belgrade, and the Computer Graphics Meet Up at Crater VFX Studio in Belgrade, all in 2016.

Introduction

This paper is part of extensive artistic and theoretical research in generative art and its broader context. It is motivated by the observation that there are complex connections between creativity in cinematography and the procedural fluency that is essential in generative art. Artists of generative cinema have targeted these connections implicitly or explicitly, but they remain virtually untouched in theoretical discourse. Film studies traditionally focus on the historical, narrative, formal, aesthetic and political aspects of the relations between cinema, technology, culture, media and other art forms. Theoretical studies in new media art primarily address these relations on the conceptual, material and phenomenological levels, investigating and comparing how different kinds of information are captured, stored, manipulated, retrieved and perceived in film and in digital media. In Cinema and the Code (1989), Gene Youngblood anticipates the creative potentials of the algorithmic foundation of code-based processing of film's formal elements, but never explicates them.[1]

This paper explores generative cinema by discussing successful and thought-provoking art projects that represent relevant approaches to cinema in generative art and exemplify the artists' abilities to transcend the conceptual, expressive and aesthetic limits of code-based art. The theme is considered primarily from the perspective of the artists' creative thinking and critical evaluation, with the aim of showing that the cognitive tensions between film and generative art have significant expressive, intellectual and ethical implications that could benefit both fields. The paper also aims to encourage and open up possibilities for further theoretical and practical research in generative cinema.

The statements in this paper are based on a combination of a literature review (including theoretical texts, media art histories, catalogues, articles and websites in relevant areas) and the author's experience as an artist, curator and educator in the field of new media. Concrete knowledge of the methodologies, procedures, requirements and limitations of actual artistic practice is rarely reflected or used in theoretical texts, which are predominantly based on the analysis of other texts. It is, however, an invaluable asset that sharpens the critical edge and improves both the efficiency of reasoning and the depth of understanding in theoretical work. This particular viewpoint both enables and requires the author to bring theory and practice together in a more comprehensive way.

Generative Cinema

The immense poetic and expressive potentials of film have barely been realized within the cinematic cultural legacy, mainly due to industrialization, commercialization, politicization and the consequent adherence to pop-cultural paradigms.[2] Unrestrained by commercial imperatives and motivated by unconventional views on film, animation and art in general, generative artists have started to engage these potentials playfully and efficiently, with explicit or implicit critique of cinema in a broader cultural, economic and political context.

The conceptions of generative art in contemporary discourse differ in their inclusiveness.[3][4][5][6][7][8] In this paper, generative art is viewed broadly, as a heterogeneous realm of artistic approaches based on combining predefined elements with different factors of unpredictability in conceptualizing, producing and presenting the artwork, thus formalizing the uncontrollability of the creative process and underlining and aestheticizing the contextual nature of art.[9][10] Consequently, generative cinema is understood as the development and application of generative art methodologies in working with film both as a medium and as source material.

Generative cinema has been one of the emerging fields of digital art over the past twenty years. Before that, generative techniques were seldom explored in either conventional or experimental film.[11][12][13] As a logical extension of generative animation,[14] generative cinema in digital art became feasible with the introduction of affordable tools for digital recording and editing of video and film. It expanded technically, methodologically and conceptually with the development of computational techniques for manipulating large numbers of images, audio samples, indexes and other types of relevant film data. Diversifying beyond purely computation-based generativity, which drew considerable and well-deserved criticism[4][7] and is still widely regarded as generative art proper, the production of generative cinema unfolds into a number of practices with different poetics and incentives. Here are some examples.

Supercut

Christian Marclay's Telephones (1995) used the supercut as a generative mixer of conventional cinematic situations involving phone calls. A supercut is an edited set of short video and/or film sequences selected and extracted from their sources according to at least one recognizable criterion. It inherited the looped editing technique of Structural film, which was popular in the US during the 1960s and developed into Structural/Materialist film in the UK in the 1970s. Focusing on specific words, phrases, scene blockings, visual compositions, camera dynamics, etc., supercuts often accentuate the repetitiveness of narrative and technical clichés in film and television.

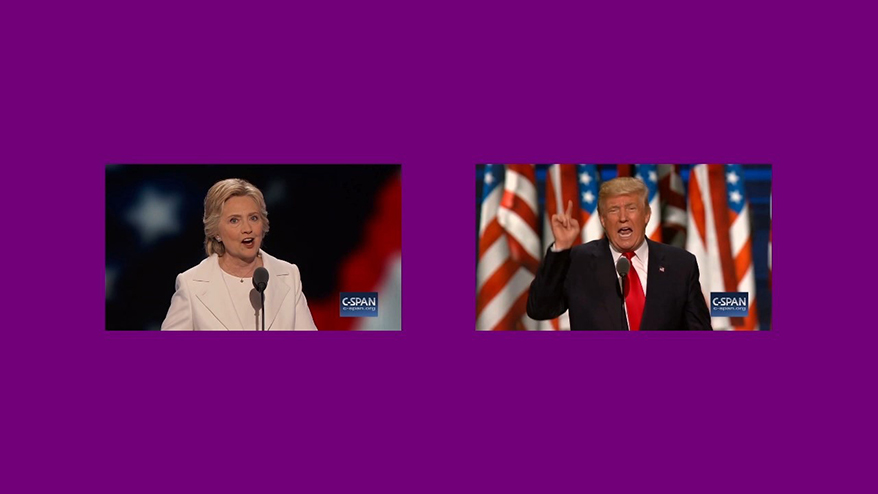

With the explosion of online video sharing, the supercut became a pop-cultural genre but remained a potent artistic device, for example in Jennifer & Kevin McCoy's installations Every Shot, Every Episode (2001) and Every Anvil (2002), in Tracey Moffatt's explorations of pop-cultural themes such as Lip (1999), Artist (2000) and Love (2003, with Gary Hillberg), and in Marco Brambilla's Sync (2005). Kelly Mark gave it a witty existential flavor in her post-conceptual, post-media works REM (2007) and Horroridor (2008). R. Luke DuBois charged it with political and meta-political critique in his brilliant projects Acceptance (2012) and Acceptance 2016 (2016), two-channel video installations in which the acceptance speeches of the two major-party presidential candidates (Obama and Romney in 2012, Clinton and Trump in 2016) are continuously synchronized to the words and phrases each of them speaks; the speeches are 75-80% identical in wording but distributed differently.
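The observation that two speeches can be 75-80% identical yet distributed differently can be made concrete by comparing a measure that ignores word order with one that respects it. The following Python sketch is a minimal illustration under stated assumptions: tokenization is naive, and "identical" is taken to mean multiset word overlap. It is not DuBois' method, which the sources cited here do not describe.

```python
import re
from collections import Counter
from difflib import SequenceMatcher


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is dropped (a naive assumption)."""
    return re.findall(r"[a-z']+", text.lower())


def shared_vocabulary_ratio(a: str, b: str) -> float:
    """Share of word tokens, counted with repetition, that both texts contain,
    regardless of where they occur."""
    ca, cb = Counter(tokenize(a)), Counter(tokenize(b))
    shared = sum((ca & cb).values())  # multiset intersection
    total = max(sum(ca.values()), sum(cb.values()))
    return shared / total if total else 0.0


def same_order_ratio(a: str, b: str) -> float:
    """difflib similarity, which only credits words matched in the same order."""
    return SequenceMatcher(None, tokenize(a), tokenize(b)).ratio()
```

A high first ratio combined with a noticeably lower second one is one simple way to express "identical but distributed differently".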

The conceptual and technical logic of the supercut received a fundamental critical assessment with Sam Lavigne's Python applications Videogrep (2014), which generates supercuts by using semantic analysis of video subtitles to match segments with selected words, and Audiogrep (2015), which transcribes audio files and creates audio supercuts based on input search phrases.
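The core step of a subtitle-driven supercut, finding the timed subtitle cues that match a query and collecting their time ranges as an edit list, can be sketched in a few lines of Python. This is not Videogrep's code: the function names are hypothetical, the parser handles only plain SubRip (.srt) text, and the matching is a regular-expression search rather than any deeper analysis. Cutting and joining the actual video segments is left out.

```python
import re
from dataclasses import dataclass

# "HH:MM:SS,mmm --> HH:MM:SS,mmm" timing line of a SubRip cue
TIMECODE = re.compile(r"(\d+):(\d+):(\d+),(\d+)\s*-->\s*(\d+):(\d+):(\d+),(\d+)")


@dataclass
class Cue:
    start: float  # seconds
    end: float
    text: str


def parse_srt(srt: str) -> list[Cue]:
    """Parse SubRip text into cues; cue blocks are separated by blank lines."""
    cues = []
    for block in re.split(r"\n\s*\n", srt.strip()):
        lines = block.strip().splitlines()
        for i, line in enumerate(lines):
            m = TIMECODE.search(line)
            if m:
                h1, m1, s1, ms1, h2, m2, s2, ms2 = map(int, m.groups())
                start = h1 * 3600 + m1 * 60 + s1 + ms1 / 1000
                end = h2 * 3600 + m2 * 60 + s2 + ms2 / 1000
                cues.append(Cue(start, end, " ".join(lines[i + 1:])))
                break
    return cues


def supercut_ranges(cues: list[Cue], pattern: str) -> list[tuple[float, float]]:
    """(start, end) times of every cue whose text matches `pattern`, in film
    order: the edit list of a supercut."""
    rx = re.compile(pattern, re.IGNORECASE)
    return [(c.start, c.end) for c in cues if rx.search(c.text)]
```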

Statistical

Classification, indexing and systematic quantification of formal qualities in time-based media allow for building databases which can be handled and manipulated with statistical tools. This enables the artists to make alternative visualizations and temporal mappings that reveal the overall visual and structural logic of popular films.

The idea of unconventional editing and presentation of film has been explored in a number of projects. Soft Cinema: Navigating the Database (2002-2003) by Lev Manovich and Andreas Kratky demonstrates Manovich’s view of the cinema as a digital (discrete) medium and of the film as a database. The project was based on classifying and tagging a set of stored video clips, algorithmically creating the editing scenarios in real-time, and on devising a user interface for arranging, navigating and playing the material.[15]
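Rule-driven editing of the kind Soft Cinema is described as performing, tagging clips and generating editing scenarios from the tags, can be sketched as follows. The chaining rule (each clip shares at least one tag with its predecessor) and all names are hypothetical illustrations, not Manovich and Kratky's actual rules.

```python
import random
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Clip:
    name: str
    duration: float  # seconds
    tags: frozenset = field(default_factory=frozenset)


def edit_scenario(clips, length, seed=None):
    """Return up to `length` distinct clips in which each clip, where possible,
    shares at least one tag with the clip before it (hypothetical rule)."""
    if not clips or length < 1:
        return []
    rng = random.Random(seed)
    unused = list(clips)
    current = rng.choice(unused)
    sequence = [current]
    unused.remove(current)
    while unused and len(sequence) < length:
        related = [c for c in unused if c.tags & current.tags]
        # Fall back to any unused clip when no tagged neighbour is left.
        current = rng.choice(related or unused)
        sequence.append(current)
        unused.remove(current)
    return sequence
```

Changing the seed yields a different but equally rule-abiding sequence, which is the sense in which such a scenario is generated rather than fixed.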

Daniel Shiffman's video wall Filament (2008) continuously animates and shifts a sequence of 1400 frames (50 seconds) from Tom Tykwer's Run Lola Run (1998). Programmed manipulation of digitized film also enables artists to process films statistically, frame by frame, for example in Ben Fry's Disgrand (1998), in Ryland Wharton's Palette Reduction (2009), and in Jim Campbell's Illuminated Average Series (2000-2009), which averages and merges all the frames of Orson Welles' Citizen Kane (1941) and Hitchcock's Psycho (1960).[16]
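Frame averaging of the kind used in the Illuminated Average Series reduces to a pixel-wise mean over all frames. The sketch below assumes the frames have already been decoded into equally sized grayscale images (nested lists of 0-255 values); video decoding and color handling are omitted, and a practical version would normally use an array library.

```python
def average_frames(frames):
    """Pixel-wise mean of an iterable of equally sized grayscale frames.

    Each frame is a list of rows, each row a list of 0-255 values. A running
    sum keeps memory constant however many frames the film has."""
    total = None
    count = 0
    for frame in frames:
        if total is None:
            total = [[0] * len(row) for row in frame]
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                total[y][x] += value
        count += 1
    if count == 0:
        raise ValueError("no frames to average")
    return [[v / count for v in row] for row in total]
```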

In Portrait (2013), Shinseungback Kimyonghun used computer vision in the statistical style of Jim Campbell and Jason Salavon. The software detects faces in every 24th frame of a selected movie and averages and blends them into one, generating facial identities that indicate the dominant portraits of the selected movies and stressing the figurative paradigm in mainstream cinema.[17]
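The Portrait pipeline as described (sample every 24th frame, detect faces, blend them) can be sketched with the face detector passed in as a parameter. The detector callable, the square output size and the nearest-neighbour resizing are assumptions for illustration; a real implementation would rely on a computer vision library for detection and alignment.

```python
def resize_nearest(img, size):
    """Nearest-neighbour resize of a grayscale image (list of rows) to size x size."""
    h, w = len(img), len(img[0])
    return [[img[y * h // size][x * w // size] for x in range(size)]
            for y in range(size)]


def average_face(frames, detect_faces, step=24, size=64):
    """Blend every face found in every `step`-th frame into one mean face.

    `detect_faces(frame)` is an assumed callable returning (x, y, w, h) boxes;
    frames are grayscale images given as lists of rows."""
    acc = [[0.0] * size for _ in range(size)]
    n = 0
    for i, frame in enumerate(frames):
        if i % step:  # keep only frames 0, step, 2*step, ...
            continue
        for x, y, w, h in detect_faces(frame):
            crop = [row[x:x + w] for row in frame[y:y + h]]
            face = resize_nearest(crop, size)  # bring all faces to one size
            for r in range(size):
                for c in range(size):
                    acc[r][c] += face[r][c]
            n += 1
    if n == 0:
        return None  # no faces were found
    return [[v / n for v in row] for row in acc]
```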

The classic conceptual, formal and experiential form of infographic processing of film was achieved in Frederic Brodbeck’s graduation project Cinemetrics (2011). The core of the project is a Python-based online application for interactive visualization and analysis of the loaded films according to a number of criteria such as duration, average luminance and chromatic values, number of cuts, dynamics of movement in sequences, comparisons between different genres, original film versions vs. remakes, films by the same director, films by different directors, etc.[18]
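Two of the Cinemetrics criteria, average luminance and the number of cuts, lend themselves to a short sketch. Luminance uses the Rec. 601 luma weights; the cut detector, which flags a large jump in mean luminance between consecutive frames, is a deliberately crude assumption with an arbitrary threshold, not Brodbeck's method (practical shot-boundary detectors usually compare color histograms).

```python
def luma(rgb):
    """Rec. 601 luma of an (r, g, b) pixel with 0-255 channels."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def mean_luminance(frame):
    """Mean luma of a frame given as a flat list of (r, g, b) pixels."""
    return sum(luma(p) for p in frame) / len(frame)


def detect_cuts(frames, threshold=40.0):
    """Indices where mean luminance jumps by more than `threshold` between
    consecutive frames: a crude stand-in for shot-boundary detection."""
    levels = [mean_luminance(f) for f in frames]
    return [i for i in range(1, len(levels))
            if abs(levels[i] - levels[i - 1]) > threshold]
```

The number of cuts is then simply `len(detect_cuts(frames))`, and the per-frame luminance values can be plotted over time.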

Crowdsourced

As an old method of outsourcing complex, iterative or otherwise demanding projects to many participants who are expected to make relatively small contributions, crowdsourcing has evolved significantly with the Internet (and has often been skillfully exploited), from the SETI@home screensaver in the early days of the WWW to FoldIt, Kickstarter, Wikipedia, CAPTCHA, and social networking and social media platforms.

In Man with a Movie Camera: The Global Remake (2008), Perry Bard combines online participation with automatic selection of crowdsourced video clips to make a properly ordered shot-by-shot interpretation of Dziga Vertov's eponymous seminal film Человек с киноаппаратом (1929). A similar idea, the surrealist ‘exquisite corpse’ method of sequentially collaging found video clips, lies behind João Henrique Wilbert's Exquisite Clock (2009), which constructs a digital clock from six screens showing users' uploaded free-style photographic interpretations of decimal digits. In Rafael Lozano-Hemmer's installation Nineteen Eighty-Four (2014), a software robot continuously extracts and displays the digits 1, 9, 8 and 4 from images of street numbers in Google Maps.
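The automatic selection step of a shot-by-shot crowdsourced remake can be sketched as grouping uploads by shot number and drawing one per shot, in the original film's order. The data layout and the fallback to the original shot when nobody has uploaded an interpretation are assumptions made for this illustration, not a description of Perry Bard's software.

```python
import random
from collections import defaultdict


def assemble_remake(shot_count, submissions, seed=None):
    """Return one clip per shot, in the original film's shot order.

    `submissions` is an iterable of (shot_number, clip_id) pairs uploaded by
    participants; shots are numbered from 1. Where no upload exists yet, the
    original shot serves as a placeholder (an assumption of this sketch)."""
    rng = random.Random(seed)
    by_shot = defaultdict(list)
    for shot, clip in submissions:
        by_shot[shot].append(clip)
    timeline = []
    for shot in range(1, shot_count + 1):
        candidates = by_shot.get(shot)
        timeline.append(rng.choice(candidates) if candidates else f"original:{shot}")
    return timeline
```

Calling the function with a different seed produces a different combination of participants' clips over the same fixed structure.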

With The Pirate Cinema (2012-2014), Nicolas Maigret brings real-time robotic sampling of film to the world of peer-to-peer exchange. The installation uses a computer that constantly downloads the 100 most viewed torrents on a tracker website, intercepts the video/audio snippets currently being downloaded, projects them on the screen along with information on their origin and destination, discards them and repeats the process with the next stream in the download queue.[19]

The idea of expanding the conventional film structure with crowdsourced, programmatically arranged and interactively manipulable content was refined and designed to consistently reflect the logic of online video sharing in Jono Brandell and George Michael Brower's Life in a Day Touchscreen Gallery (2011). It is a highly configurable platform for organizing, sorting and screening clip selections from all 80,000 short video submissions to the traditionally scripted and edited crowdsourced film Life in a Day (dir. Kevin Macdonald, 2010), which used around 10,000 selected video clips. The fact that Touchscreen Gallery was a sideshow rather than being central to the Life in a Day project reflects the dominant ideology of mainstream cinema.

Deanimated

One of the most impressive critical deconstructions of the structural and audiovisual conventions of cinema was achieved by Martin Arnold with Deanimated (2002). He successively removed both the visual and the sonic manifestations of the actors from Joseph H. Lewis's 1941 B thriller Invisible Ghost and consistently retouched the image and sound, so that in the final 15 minutes the film shows only empty spaces accompanied by the crackling of the soundtrack.[20][21]

Martin Arnold, Deanimated, 2002: corresponding stills from Invisible Ghost (left) and Deanimated (right).

Similarly motivated to avoid the figurative and narrative dictates of film tradition, Vladimir Todorović combines generative animations with voice-over narration and ambient soundtracks in The Snail on the Slope (2009), Silica-esc (2010) and 1985 (2013). 1985 is an abstract rendition of the fictional activities of the ministries of Peace, Love, Plenty and Truth that govern Oceania one year after the events of George Orwell's 1984 (1949). Its uncanny ambience relies on sudden changes of sound and image, triggered by a random walk algorithm that was modified with a cosine function, accelerated and decelerated.[22]
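One possible reading of a random walk "modified with a cosine function, accelerated and decelerated" is a walk whose step size is scaled by a cosine envelope, with a change of image and sound triggered whenever the walk crosses into a new band of values. The sketch below implements that reading only; the period, band width and triggering rule are assumptions, since the source does not describe Todorović's code.

```python
import math
import random


def cosine_random_walk(steps, period=200, base_step=1.0, seed=None):
    """Random walk whose step size is scaled by |cos|, so the walk periodically
    accelerates (|cos| near 1) and decelerates (|cos| near 0)."""
    rng = random.Random(seed)
    position, path = 0.0, []
    for t in range(steps):
        speed = base_step * abs(math.cos(2 * math.pi * t / period))
        position += rng.choice((-1.0, 1.0)) * speed
        path.append(position)
    return path


def trigger_points(path, band=5.0):
    """Indices where the walk enters a new band of width `band`; each could
    trigger a sudden change of image and sound."""
    if not path:
        return []
    current = math.floor(path[0] / band)
    triggers = []
    for i, value in enumerate(path):
        b = math.floor(value / band)
        if b != current:
            triggers.append(i)
            current = b
    return triggers
```

Because the walk nearly stalls around each quarter period and moves at full speed elsewhere, the triggers arrive in irregular clusters rather than at a steady rate.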

Documentary narrative structure can also be transcended, for example in Jonathan Minard and James George’s computer film CLOUDS (2015) which dynamically links real-time generative animations and sound with pre-recorded documentary footage.

Condensed

In Fast Film (2003), Virgil Widrich intelligently expanded the possibilities for reproducing and interpreting film snippets in order to accentuate the fascinations, obsessions and stereotypes of conventional cinema. Fast Film was created by printing frames from selected film sequences on paper, then reshaping, warping and tearing them up into new animated compositions. In its exciting 14 minutes of runtime, Fast Film provides an elegant and engaging critical condensation of key cinematic themes such as romance, abduction, chase, fight and deliverance.

Nine years later, György Pálfi exploited this narrative methodology, along with the achievements of supercut art and culture, to produce the feature-length movie Final Cut: Ladies and Gentlemen (2012) from short sequences of 450 popular films and cartoons. Although it proved barely watchable in continuity because of the fundamental incompatibility between rapid editing of incoherent imagery and a long running time, film critics praised it as ‘an ode to cinema’.[23]

Synthesized

The concept of real-time procedural audiovisual synthesis from an arbitrary sample pool, in contrast, elevates the film structure by following the essential logic of cinema. It was realized by Sven König in sCrAmBlEd?HaCkZ! (2006), which uses psychoacoustic techniques to calculate the spectral signatures of sound snippets from stored video material and saves them in a multidimensional database that is searched in real time to mimic any input sound by playing the matching audio snippets and their corresponding video.[24] Perhaps this innovative project was largely overlooked because König used the sCrAmBlEd?HaCkZ! software mainly for VJ-ing rather than for developing complex artworks that establish specific relations between the sources of stored and input material.
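The principle behind sCrAmBlEd?HaCkZ!, indexing stored snippets by spectral signature and retrieving the closest one for each incoming buffer of sound, can be sketched with a naive discrete Fourier transform and a linear nearest-neighbour search. Coarse band energies stand in here for König's psychoacoustic features, and a linear scan for his multidimensional database; both substitutions are simplifications, and the class name is hypothetical.

```python
import cmath
import math


def band_energies(samples, bands=8):
    """Crude spectral signature: magnitudes of a naive DFT summed over `bands`
    equal-width frequency bands (a stand-in for psychoacoustic features)."""
    n = len(samples)
    half = n // 2
    mags = [abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n)))
            for k in range(half)]
    width = max(1, half // bands)
    return [sum(mags[i:i + width]) for i in range(0, width * bands, width)]


class SnippetIndex:
    """Stores (signature, clip_id) pairs and returns the clip whose sound is
    spectrally closest to an input buffer."""

    def __init__(self, bands=8):
        self.bands = bands
        self.entries = []

    def add(self, samples, clip_id):
        self.entries.append((band_energies(samples, self.bands), clip_id))

    def match(self, samples):
        query = band_energies(samples, self.bands)
        return min(self.entries, key=lambda e: math.dist(e[0], query))[1]
```

Playing the video that belongs to each returned `clip_id`, buffer after buffer, is what turns the lookup into audiovisual synthesis.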

Parag Kumar Mital advanced procedural audiovisual synthesis through the application of neural networks and machine learning in YouTube Smash Up (from 2012). Each week, this online software takes the #1 YouTube video of the week and resynthesizes it using an algorithm that collages appropriate fragments of sonic and visual material taken only from the remaining Top 10 YouTube videos.[25][26] It produces a surreal animated effect, visually resembling Arcimboldo's grotesque pareidolic compositions.[27]
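Resynthesizing a target from fragments of other material can be illustrated on the visual side with a simple patch mosaic: each block of the target frame is replaced by the most similar block cut from a corpus of other frames, so the result contains only corpus pixels. This plain nearest-neighbour matching on grayscale pixels is an assumption chosen for brevity; Mital's system relies on richer audiovisual features and learning.

```python
def patches(img, size):
    """Split a grayscale image (list of rows) into non-overlapping size x size
    patches, returned as ((row, col), flat_pixel_list)."""
    out = []
    for r in range(0, len(img) - size + 1, size):
        for c in range(0, len(img[0]) - size + 1, size):
            flat = [img[r + y][c + x] for y in range(size) for x in range(size)]
            out.append(((r, c), flat))
    return out


def resynthesize(target, corpus_images, size=8):
    """Rebuild `target` from patches cut out of `corpus_images`: each target
    patch is replaced by the corpus patch with the smallest squared difference."""
    corpus = [p for img in corpus_images for _, p in patches(img, size)]
    if not corpus:
        raise ValueError("corpus is empty")
    result = [row[:] for row in target]
    for (r, c), flat in patches(target, size):
        best = min(corpus, key=lambda p: sum((a - b) ** 2 for a, b in zip(p, flat)))
        for i, value in enumerate(best):
            result[r + i // size][c + i % size] = value
    return result
```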

The more demanding machine-based synthesis of a coherent film structure and plausible narrative was tackled by Oscar Sharp and Ross Goodwin in Sunspring (2016), their entry to the 48-Hour Film Challenge of the Sci-Fi London film festival. Experienced in language hacking (natural language processing) and neural networks, Goodwin programmed a long short-term memory (LSTM) recurrent neural network and, for the learning stage, supplied it with a number of 1980s and 1990s sci-fi movie screenplays found on the Internet. The software, which appropriately ‘named’ itself Benjamin, generated the screenplay as well as the screen directions around the given prompts, and Sharp produced Sunspring accordingly.
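Training an LSTM is beyond the scope of a short example, but the underlying workflow of learning the statistics of a corpus and then sampling text that continues a prompt can be illustrated with a much simpler, swapped-in technique: a word-level Markov chain. The sketch below is not Goodwin's model and lacks the longer-range memory an LSTM provides; it only shows the train-then-prompt idea.

```python
import random
from collections import defaultdict


def train(corpus: str, order: int = 2):
    """Map each run of `order` consecutive words to the words that follow it."""
    words = corpus.split()
    model = defaultdict(list)
    for i in range(len(words) - order):
        model[tuple(words[i:i + order])].append(words[i + order])
    return model


def generate(model, prompt: str, length: int = 20, seed=None):
    """Continue `prompt` by repeatedly sampling a successor of its last words;
    stops early at a state the corpus never continued. Assumes a non-empty model."""
    rng = random.Random(seed)
    out = prompt.split()
    order = len(next(iter(model)))
    for _ in range(length):
        followers = model.get(tuple(out[-order:]))
        if not followers:
            break
        out.append(rng.choice(followers))
    return " ".join(out)
```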

The film brims with awkward lines and plot inconsistencies, but it placed among the top ten festival entries and inspired one of the judges to say, “I’ll give them top marks if they promise never to do this again.”[28] Sunspring playfully reverses the ‘Deep Content’ technology of the What is My Movie web service, which analyzes transcripts, audiovisual patterns and any other data feed that describes the video content, automatically converts this information into advanced metadata, and then processes the metadata with a machine learning system that matches it to natural-language queries.[29]

A Void Setup

All these approaches in generative cinema point to powerful algorithmic concepts for freely, parametrically and/or analytically generating cinematic structure, narrative, composition, editing, presentation and interaction. One such concept proposes a flexible system for the automatic arrangement of manually tagged film clips, or for their arrangement according to input parameters.[30] A more complex one would combine computer vision, semantic analysis and machine learning to recognize various categories and reconstruct plots from a set of arbitrarily collected shots, sequences or entire films, and to transform and reconfigure these elements according to a wide range of artist-defined criteria that substantially surpass those of conventional film.
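The simpler of these two concepts, arranging manually tagged clips according to input parameters, might look like the following sketch. The clip attributes, the tag weights and the greedy fill-then-sort strategy are hypothetical choices for illustration, not the system described in the cited source.

```python
def arrange(clips, weights, max_duration, order_by="intensity"):
    """Pick clips greedily by tag score until `max_duration` is filled, then
    order the selection by one numeric attribute.

    clips: dicts with 'name', 'duration', 'tags' (a set) and numeric attributes.
    weights: tag -> importance chosen by the artist (hypothetical parameters)."""
    def score(clip):
        return sum(weights.get(tag, 0.0) for tag in clip["tags"])

    chosen, used = [], 0.0
    for clip in sorted(clips, key=score, reverse=True):
        if score(clip) <= 0:
            break  # remaining clips carry no weighted tag
        if used + clip["duration"] <= max_duration:
            chosen.append(clip)
            used += clip["duration"]
    return sorted(chosen, key=lambda c: c[order_by])
```

Changing only the weights or the ordering attribute produces a different film from the same tagged material, which is the point of parametric arrangement.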

The algorithmic tools of generative cinema significantly expand the realm of creative methodologies for the artists working with film and animation. They empower the artists to gain insight into conceptual, formal and expressive elements of film and animation, and to enhance them through experimentation. Furthermore, the algorithmic principles of the successful generative cinema artworks, regardless of their technical transparency, can be inferred, repurposed and developed into new projects with radically different poetic identities and outcomes. These creative capacities also provide a specific context for the critical assessment of conventional film.

As with the earlier trendy ideas it had clumsily borrowed or repurposed from the avant-garde, mainstream cinema has been systematically exploiting some aesthetic effects and themes of digital generative art, with little understanding of the intellectual values behind generative methodologies. This superficial exploitation is revealed by the goofs spotted by adequately informed members of the audience. When commercial film tries to use algorithms as creative tools, it does so ineptly and ineffectually, reflecting its rigid ideology, as exemplified in this paper by Macdonald's Life in a Day and Pálfi's Final Cut.

The algorithmic strategies that the film industry applies successfully are those for conceptualization, script evaluation, box-office assessment and other business-related aspects of production, distribution and marketing. Major production companies, such as Relativity Media in Hollywood, use statistical processing of screenplay drafts, while consulting services, such as Epagogix, offer their clients big-data-based predictions of their films' market performance.[31][32][33] However, the outcries over the ultimate loss of creativity provoked by media disclosures of these practices are either naive or cynical, because business-related algorithms have always been integral to big-budget filmmaking.

This may have become more obvious since Hollywood's funding shifted towards investment banks, stock-brokerage firms and hedge funds, but algorithmization is a logical consequence of the business strategies, hierarchies and conservatism of the film industry. Formulaic screenplay design using variables such as genre, theme, narrative elements and principal actors was already prevalent in Hollywood in the 1930s. Luis Buñuel illustrated it with a predictive algorithm, a synoptic table of American cinema: "There were several movable columns [...]; the first for ‘ambience’ (Parisian, western, gangster, war, tropical, comic, medieval, etc.), the second for ‘epochs,’ the third for ‘main characters,’ and so on. Altogether, there were four or five [tabbed] categories. [...] I wanted to [...] show that the American cinema was composed along such precise and standardized lines that [...] anyone could predict the basic plot of a film simply by lining up a given setting with a particular era, ambience, and character."[34]
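Buñuel's table can be read as a small combinatorial generator: line up one entry from each movable column. In the sketch below, the ‘ambience’ values are taken from the quotation, while the entries for epochs and main characters are hypothetical placeholders, since Buñuel does not list them here.

```python
import itertools
import random

# 'ambience' values are quoted from Buñuel; the other two columns are
# hypothetical placeholders, since the quotation does not list their entries.
COLUMNS = {
    "ambience": ["Parisian", "western", "gangster", "war", "tropical", "comic", "medieval"],
    "epoch": ["antiquity", "19th century", "present day"],
    "main character": ["detective", "showgirl", "aviator"],
}


def predict_plot(rng=random):
    """Line up one entry from each movable column, as in Buñuel's table."""
    return {column: rng.choice(values) for column, values in COLUMNS.items()}


def all_plots():
    """Enumerate every combination the table can produce."""
    keys = list(COLUMNS)
    for combo in itertools.product(*(COLUMNS[k] for k in keys)):
        yield dict(zip(keys, combo))
```

With the columns above the table yields 7 × 3 × 3 = 63 basic plots; adding Buñuel's fourth or fifth category multiplies that number again.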

The contemporary film industry shares much of its dogmatism with 1930s Hollywood. It is evident in the unquestioned dominance of storytelling over event, figuration over abstraction, explanation over ambience and certitude over ambiguity; in the recycling of motifs and themes; in the exploitation of the aesthetics of comics, videogames, music videos, television and visual arts; in remaking, serialization and franchising; in reducing technical innovations to routine tools for streamlining production; and so on. This dogmatism shapes the agendas of commercial film and forces it to employ algorithms in simplistic, mechanical and unimaginative ways.

Struggling with competing new media and art forms, today's film industry is unable to transcend, and unwilling to hide, its fundamentally commercial motivation, which relies on communicating a subset of human universals.[35] Therefore, it runs its business more consciously and rationally, focusing its algorithms on market analysis, target group research, risk assessment and screenplay design, all the way to test-screening evaluations, which correspond to debugging procedures in computer programming. While this pragmatic algorithmization seems logical, it is creatively counterproductive; a global mass-market film industry could benefit from generative cinema only if it took certain commercial risks and opened up to the experimental incentives of its creative talents.

Unrestrained by commercial imperatives and motivated by unconventional views on film, animation and art in general, generative artists develop new approaches and methodologies that other artists can advance and repurpose. They inspire our amazement at the moving image and, at the same time, broaden our critical understanding of cinema as a cultural product. With incomparable inventiveness and efficiency, they explore and expand the key conceptual, formal and narrative potentials of animation and film, reminding us that conventional cinema is just a subset of the divergent medium of the moving image. All these creative layers have been accessible to film artists since the invention of cinema but were seldom explored, and then primarily on the margins of experimental production. In this regard, generative cinema is becoming the supreme art of the moving image in the early 21st century. Its poetic versatility, technical fluency and expressive cogency demonstrate that authorship is evolving toward ever more abstract reflection and cognition, which treat existing creative achievements equally as inspirations, sources of knowledge and tools.

[1] Youngblood, Gene. “Cinema and the Code.” Leonardo, Computer Art in Context Supplemental Issue (1989): 27-30.

[2] Benjamin, Walter. 2008. “The Work of Art in the Age of Its Technological Reproducibility” in The Work of Art in the Age of Its Technological Reproducibility, and Other Writings on Media. Cambridge, MA / London: The Belknap Press of Harvard University Press.

[3] Galanter, Philip. 2003. "What is Generative Art? Complexity Theory as a Context for Art Theory" in GA2003: VI Generative Art Conference Proceedings, edited by Celestino Soddu. Milan: Domus Argenia Publisher: 225-245.

[4] Arns, Inke. 2004. "Read_me, run_me, execute_me. Code as Executable Text: Software Art and its Focus on Program Code as Performative Text" in Medien Kunst Netz.

[5] Quaranta, Domenico. 2006. "Generative (Inter)Views: Recombinant Conversation With Four Software Artists" in C.STEM. Art Electronic Systems and Software Art, edited by Domenico Quaranta. Turin: Teknemedia.

[6] Boden, Margaret, and Ernest Edmonds. 2009. "What is Generative Art?" Digital Creativity 20, No. 1-2: 21-46.

[7] Watz, Marius. 2010. "Closed Systems: Generative Art and Software Abstraction" in MetaDeSIGN - LAb[au], edited by Eléonore de Lavandeyra Schöffer, Marius Watz and Annette Doms. Dijon: Les presses du réel.

[8] Pearson, Matt. 2011. Generative Art. Shelter Island, NY: Manning Publications.

[9] Dorin, Alan, Jonathan McCabe, Jon McCormack, Gordon Monro and Mitchell Whitelaw. “A Framework for Understanding Generative Art.” Digital Creativity 23, No. 3-4 (2012): 239-259.

[10] Grba, Dejan. 2015. “Get Lucky: Cognitive Aspects of Generative Art” in GA2015: XIX Generative Art Conference Proceedings, edited by Celestino Soddu. Venice: Fondazione Bevilacqua La Masa: 200-213.

[11] Leggett, Mike. “Generative Systems and the Cinematic Spaces of Film and Installation Art.” Leonardo 40, No. 2 (April 2007): 123-128.

[12] Hansen, Mark B. N. 2015. “Digital Technics Beyond the ‘Last Machine’: Thinking Digital Media with Hollis Frampton (Modulating Movement-Perception)” in Between Stillness and Motion: Film, Photography, Algorithms, edited by Eivind Røssaak. Amsterdam: Amsterdam University Press: 45-72.

[13] See Youngblood [1].

[14] Montfort, Nick et al. 2012. 10 PRINT CHR$(205.5+RND(1));: GOTO 10. Cambridge, MA: The MIT Press.

[15] Manovich, Lev and Andreas Kratky. 2005. Soft Cinema: Navigating the Database. Cambridge, MA: The MIT Press.

[16] Campbell, Jim. 2000/2009. “Illuminated Average Series.” Jim Campbell.

[17] Shinseungback Kimyonghun. 2013. “Portrait.” Shinseungback Kimyonghun.

[19] Cox, Geoff. “Real-Time for Pirate Cinema.” PostScriptUM, No. 20, Aksioma Institute for Contemporary Art (2015).

[20] Cahill, James Leo. “...and Afterwards? Martin Arnold’s Phantom Cinema.” Cinema, Special Graduate Conference Issue, Spectator 27: Supplement (2007): 19-25.

[21] Matt, Gerald and Thomas Miessgang. 2002. Martin Arnold: Deanimated. Vienna: Kunsthalle Wien / Springer Verlag.

[23] Q. P. Final Cut - Ladies and Gentlemen, an Ode to Cinema. Festival de Cannes, 2012.

[25] Mital, Parag Kumar. 2012. YouTube Smash Up. Parag Kumar Mital website.

[26] Mital, Parag Kumar. 2014. Computational Audiovisual Scene Synthesis. Ph.D. thesis, Arts and Computational Technologies, Goldsmiths, University of London.

[27] Pagden, Sylvia Ferino. 2007. Arcimboldo. Milano: Skira.

[28] Newitz, Annalee. “The Multiverse / Explorations & Meditations on Sci-Fi.” Ars Technica, June 9, 2016.

[29] Valossa. 2016. “Description of Research.” What Is My Movie.

[30] Berga, Quelic Carreras. “Code as a Medium to Reflect, Act and Emancipate: Case Study of Audiovisual Tools that Question Standardised Editing Interfaces.” 1st International Conference Interface Politics (2016).

[31] Jones, Chris. “Ryan Kavanaugh Uses Math to Make Movies.” Esquire, November 19, 2009.

[32] Barnes, Brooks. “Solving Equation of a Hit Film Script, With Data.” The New York Times, May 5, 2013.

[33] Smith, Stacey Vanek. “What’s Behind the Future of Hit Movies? An Algorithm.” Marketplace, July 19, 2013.

[34] Buñuel, Luis. 1985. My Last Breath. London: Fontana Paperbacks: 131-133.

[35] See Brown, Donald E. 1991. Human Universals. New York, NY: McGraw-Hill.

Going Digital: Innovation In Art, Architecture, Science and Technology Conference Proceedings. Belgrade: STRAND: 205-211.

Generative Art 2016 Conference Proceedings. Florence: La Fondazione Cassa di Risparmio di Firenze, 2016: 155-166.

Consciousness Reframed 2016 Conference Proceedings. Technoetic Arts: A Journal of Speculative Research. Bristol: Intellect, 2017.